Overview

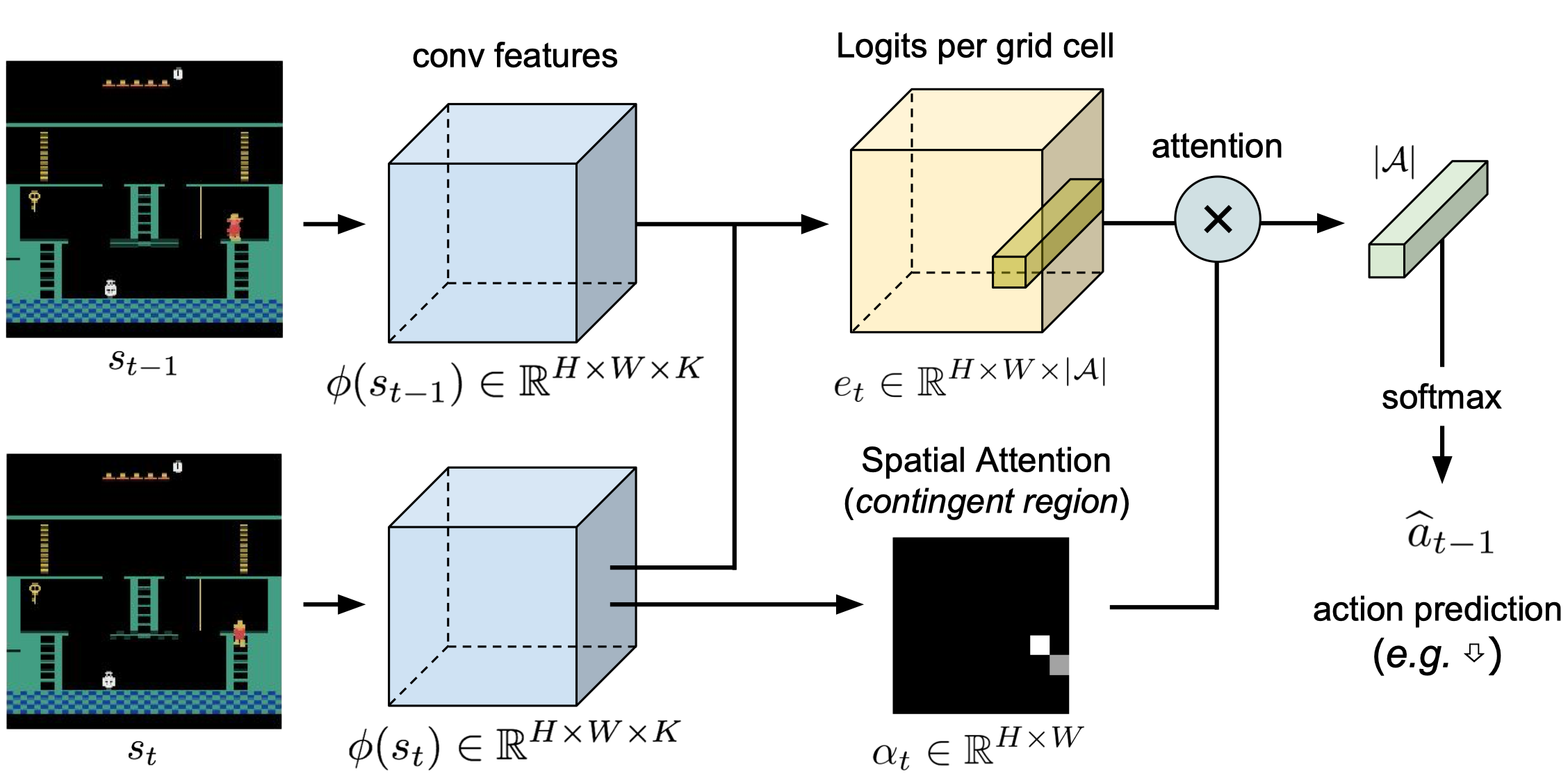

We investigate whether learning contingency-awareness, that is, learning which aspects of an environment the agent can control, can lead to efficient exploration in reinforcement learning. We develop an attentive dynamics model (ADM) that discovers controllable elements of the observations. The ADM can be trained in a self-supervised fashion using only the agent's own experience.
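
To make "self-supervised" concrete, here is a minimal sketch of one way such a model could be set up. It assumes the model is trained to predict the action the agent just took, using soft attention over a grid of image regions, so that the most-attended region serves as an estimate of the controllable element. The overview above does not specify the architecture; the grid layout, the function names (`adm_forward`, `action_loss`), and the plain-softmax attention are illustrative assumptions.

```python
import math


def softmax(xs):
    # Numerically stable softmax over a list of floats.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def adm_forward(attn_scores, cell_logits):
    """Hypothetical ADM forward pass (illustration only).

    attn_scores: one unnormalized attention score per grid cell.
    cell_logits: for each grid cell, a list of action logits.
    Returns (action probabilities, index of the most-attended cell).
    """
    alpha = softmax(attn_scores)  # attention weights over grid cells
    n_actions = len(cell_logits[0])
    # Attention-weighted sum of the per-cell action logits.
    pooled = [
        sum(a * logits[k] for a, logits in zip(alpha, cell_logits))
        for k in range(n_actions)
    ]
    action_probs = softmax(pooled)
    location = max(range(len(alpha)), key=alpha.__getitem__)
    return action_probs, location


def action_loss(action_probs, taken_action):
    # Cross-entropy against the action the agent actually took.
    # The label comes from the agent's own experience, so no annotation is needed.
    return -math.log(action_probs[taken_action])
```

In a real model the scores and logits would come from a convolutional encoder applied to consecutive frames; here they are passed in directly to keep the sketch self-contained.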

We combine an actor-critic algorithm with count-based exploration using the discovered contingent regions, achieving strong results in sparse-reward Atari games: for example, we report a state-of-the-art score of >11,000 points on Montezuma's Revenge without using expert demonstrations, explicit high-level information (e.g., RAM states), or resetting to arbitrary states.
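
The count-based component can be illustrated with a small sketch. It assumes the contingent region is summarized as a pixel location, discretized into a coarse grid cell, and that the exploration bonus decays as the inverse square root of the visit count, a common choice for count-based bonuses. The class `CountBonus`, the helper `location_key`, the cell size, and the `room` tag are hypothetical names and parameters chosen for illustration, not details taken from the text above.

```python
import math
from collections import defaultdict


class CountBonus:
    """Count-based exploration bonus over hashable keys (illustrative helper)."""

    def __init__(self, scale=1.0):
        self.scale = scale
        self.counts = defaultdict(int)

    def bonus(self, key):
        # Increment the visit count for this key, then return scale / sqrt(count).
        self.counts[key] += 1
        return self.scale / math.sqrt(self.counts[key])


def location_key(x, y, cell=8, room=0):
    # Discretize a pixel location into a grid cell; `room` is an assumed context tag.
    return (room, x // cell, y // cell)
```

A training loop would add `bonus(location_key(x, y))` to the environment reward at each step before the actor-critic update, so that rarely visited locations yield a larger learning signal.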